Certified Professional in AI Security (CAIS)

The Certified Professional in AI Security and Machine Learning Defense (CAIS) is an intensive 2-month program that transforms cybersecurity professionals into AI security specialists capable of defending against sophisticated adversarial attacks.

You’ll master both offensive red team techniques and defensive blue team strategies specific to AI/ML systems, from adversarial attacks and model poisoning to runtime monitoring and privacy-enhancing technologies.

Key Features of the Program

Heisenberg Institute CAIS Professional Certification

Industry-recognized credential in AI/ML cybersecurity

Offensive AI Security Masterclass

Hands-on adversarial attack techniques and red team methodologies

World-Class Faculty

Learn from former AI security researchers and penetration testing specialists

Practical Attack Portfolio

Execute simulated adversarial attacks and build defensive frameworks

Industry-Standard Tools Mastery

Hands-on experience with Adversarial Robustness Toolbox (ART), CleverHans, Foolbox, ModelScan, and AI security frameworks

CTF and Red Team Training

Participate in AI-focused Capture The Flag competitions and red team exercises

Career Acceleration Support

Dedicated career services for highly specialized AI security roles

Cutting-Edge Curriculum

Stay current with the latest AI security risks and countermeasures, including strong data validation, model security, and ethical AI practices

15X Higher Practical Application

Unique hands-on program with adversarial attack simulation and defense implementation

Flexible Learning Options

Live online masterclasses on Fridays and Saturdays with recorded sessions and virtual lab access

WHO IS THIS PROGRAM FOR

CYBERSECURITY PROFESSIONALS

- Security Engineers Specializing in AI/ML Defense
- Penetration Testers Expanding to AI Attack Vectors
- Security Analysts Adding AI Threat Intelligence
- Incident Responders Handling AI Security Breaches

TECHNICAL & LEADERSHIP ROLES

- Security Architects Designing AI Security Strategies
- CISO Teams Building Enterprise AI Defenses
- MLOps Engineers Securing ML Pipelines
- AI Developers Building Inherently Secure Systems

ADVANCED SPECIALISTS

- Red Team Specialists Targeting AI Systems
- Blue Team Defenders Protecting ML Models
- Government & Defense Security Professionals
- Threat Researchers Studying AI Vulnerabilities

WHY HEISENBERG

50+ partners across universities, research institutes, and AI companies

Access unparalleled opportunities through our extensive network spanning academia and industry, providing diverse perspectives and real-world connections throughout your learning journey.

12 weeks of internship training at AI partner companies

Apply classroom knowledge immediately in structured internships with leading AI companies, where you’ll solve actual business challenges alongside industry professionals.

18 industry experts contributing to curriculum design and mentorship

Learn directly from AI practitioners who’ve implemented solutions at scale. Our experts shape curriculum content and provide personalized guidance throughout your training.

3x faster skill acquisition than other training providers

Our immersive methodology accelerates learning through guided practice and immediate application, helping you master complex AI concepts and implementations in record time.

24/7 access to learning resources and a support community

Never hit a roadblock with our always-available learning platform and vibrant community of peers, mentors, and alumni ready to help anytime.

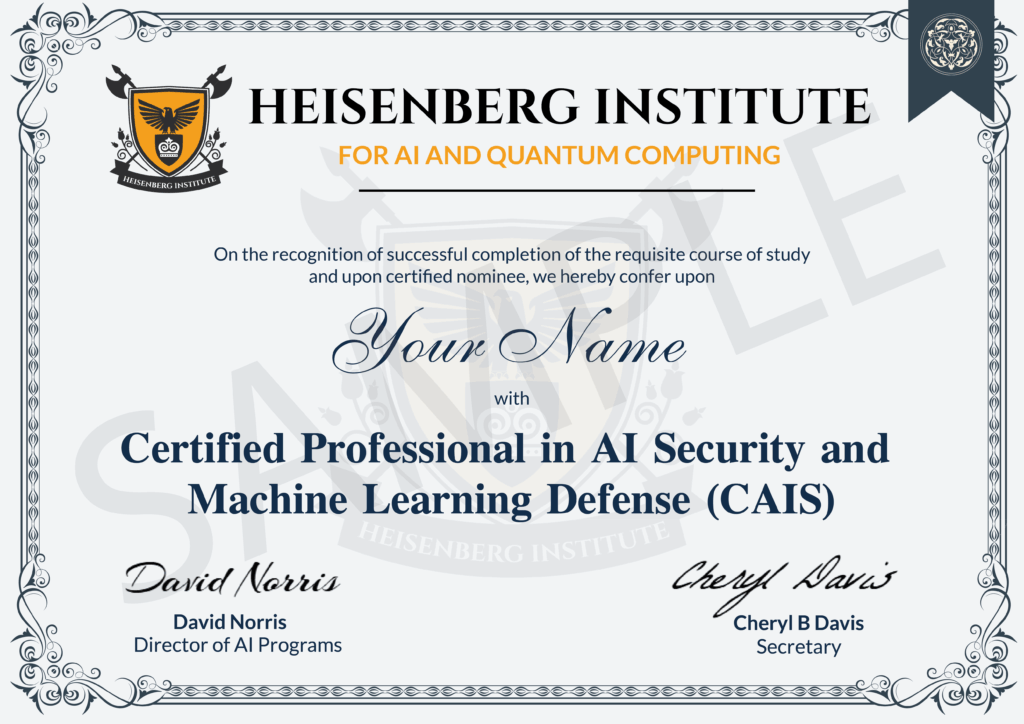

Certification

Upon successful completion of the program, participants will receive the Certified Professional in AI Security and Machine Learning Defense (CAIS) credential from the Heisenberg Institute for AI and Quantum Computing.

- Professional Credential: validates hands-on expertise in adversarial attacks, model hardening, and AI threat defense
- Elite Career Positioning: prepares you for specialized roles as AI Security Engineers, ML Security Architects, and Red Team Specialists

LEARNING PATH

The program is structured into three distinct phases to ensure a seamless learning journey.

Phase 1 (Weeks 1-2)

Foundations of AI Security & Threat Modeling

- ML Lifecycle Security Framework: Vulnerability identification across data collection, model training, deployment, and inference phases.
- AI Threat Taxonomy: Comprehensive classification of attacks targeting integrity, confidentiality, availability, and robustness.
- Risk Assessment Methodologies: Enterprise-level AI security risk evaluation and threat modeling.
- AI Security Tool Ecosystem: Hands-on introduction to ART, CleverHans, Foolbox, and testing environments.
- Responsible AI Security Integration: Ethical considerations as security enablers in ML systems.
- Threat Intelligence for AI Systems: Understanding attacker motivations, capabilities, and attack surfaces.

Phase 2 (Weeks 3-5)

Offensive AI Security - Red Team Techniques

- Adversarial Evasion Attacks: Execute FGSM, C&W, PGD, and advanced perturbation techniques on ML models.
- Data Poisoning & Backdoor Attacks: Training data manipulation and trojan implementation methodologies.
- Model Extraction & Inversion: Intellectual property theft and data reconstruction attack techniques.
- Generative AI Attacks: Prompt injection, jailbreaking, and LLM manipulation strategies.
- Membership Inference Attacks: Privacy violation by determining whether specific records were part of a model's training data.
- Custom Attack Development: Building novel adversarial techniques for specific target architectures.
- AI Penetration Testing: Systematic approach to ML system security assessment and vulnerability analysis.
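To give a feel for the evasion attacks named above, here is a minimal sketch of the Fast Gradient Sign Method (FGSM) against a toy logistic regression model, written in plain Python. The weights, bias, input, and `epsilon` are hypothetical values chosen for illustration; this is not course material, and in practice a library such as ART, CleverHans, or Foolbox would typically be used against real models.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def fgsm(weights, bias, x, y, epsilon):
    """One-step FGSM: shift every feature by epsilon in the direction
    that increases the cross-entropy loss for the true label y."""
    p = predict_proba(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (illustrative values only).
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25
x_clean = [0.6, 0.2, 0.4]  # assumed true label: 1

x_adv = fgsm(WEIGHTS, BIAS, x_clean, y=1, epsilon=0.5)

print("clean prediction:", predict_proba(WEIGHTS, BIAS, x_clean))
print("adversarial prediction:", predict_proba(WEIGHTS, BIAS, x_adv))
```

With these toy values the perturbation is bounded by `epsilon` per feature, yet it moves the predicted probability across the 0.5 decision boundary. PGD can be thought of as repeating this step with a smaller step size and projecting back into the `epsilon` ball after each iteration.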

Phase 3 (Weeks 6-8)

Defensive Strategies & Model Hardening

- Adversarial Defense Techniques: Adversarial training, gradient masking, and input transformation for model resilience.
- Data Security & Validation: Anomaly detection, source verification, and feature store protection systems.
- Model IP Protection: Watermarking, fingerprinting, and unauthorized usage detection mechanisms.
- Runtime Security Monitoring: Baseline establishment, drift detection, and anomaly alerting for production AI.
- Secure Inference Architecture: API security, container hardening, and secrets management for ML systems.
- Privacy-Enhancing Technologies: Differential privacy, federated learning, and homomorphic encryption implementation.
- AI Incident Response: Playbooks for adversarial attacks, model failures, and data breach scenarios.
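As one illustration of the runtime monitoring topic above, the sketch below compares a baseline window of model confidence scores with a live window using the Population Stability Index (PSI). It assumes scores lie in [0, 1]; the sample data, the bin count, and the `ALERT_THRESHOLD` of 0.2 are hypothetical choices for the example, not values taken from the program.

```python
import math

def bin_fractions(scores, n_bins):
    """Fraction of scores in each equal-width bin over [0, 1]."""
    counts = [0] * n_bins
    for s in scores:
        # Clamp so that a score of exactly 1.0 lands in the last bin.
        counts[min(int(s * n_bins), n_bins - 1)] += 1
    return [c / len(scores) for c in counts]

def psi(baseline, live, n_bins=5, floor=1e-6):
    """Population Stability Index between two score samples.
    Empty bins are floored to avoid division by zero and log(0)."""
    base = bin_fractions(baseline, n_bins)
    curr = bin_fractions(live, n_bins)
    total = 0.0
    for b, c in zip(base, curr):
        b, c = max(b, floor), max(c, floor)
        total += (c - b) * math.log(c / b)
    return total

# Hypothetical score windows (illustrative values only).
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
shifted_scores = [0.8, 0.85, 0.9, 0.9, 0.95, 0.95, 0.97, 0.98, 0.99, 0.99]

ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per system

drift = psi(baseline_scores, shifted_scores)
if drift > ALERT_THRESHOLD:
    print(f"drift alert: PSI={drift:.2f}")
```

A production monitor would usually track several signals (input features, prediction distributions, error rates) and route alerts into the incident response playbooks; PSI on a single score is only the simplest starting point.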

Program Eligibility Requirements

This is a highly selective program with only 20 candidates accepted per cohort through a rigorous evaluation process. Applications are reviewed holistically, considering motivation and potential for growth in the AI field. Given the limited seats available, early application is strongly encouraged.

Selection Criteria

- Statement of purpose demonstrating genuine interest in AI and career goals
- 5+ years of experience in information security or a related field, or
- A bachelor's degree, or final-year undergraduate or postgraduate standing, in cybersecurity or a related field with an average of 50% or higher marks, or
- A current CISA, CISSP, or CISM certification
- Personal interview assessing motivation, learning ability, and cultural fit

Cohort Commencement Dates

The CAIS program admits a limited number of candidates per cohort. Review the intake schedule below and apply early to secure your seat in the next session.

| Cohort | Application Closes | Program Starts |
|---|---|---|
| Winter Cohort | December 15, 2025 | January 15, 2026 |
| Spring Cohort | March 15, 2026 | April 15, 2026 |
| Summer Cohort | June 15, 2026 | July 15, 2026 |
| Fall Cohort | September 15, 2026 | October 15, 2026 |

Note: Applicants are encouraged to apply 6–8 weeks before the application deadline.

Full Program Fee: USD 1,499

Invest in elite AI security expertise with an intensive certification that combines offensive red team techniques with defensive strategies and positions you at the forefront of a critical cybersecurity specialization.

Your Value Includes:

- Certified Professional in AI Security and Machine Learning Defense (CAIS): industry-recognized credential awarded by the Heisenberg Institute for AI and Quantum Computing
- Offensive & Defensive Security Masterclasses: live expert-led training in adversarial attacks, model poisoning, and ML system defense strategies
- Real-World Attack Simulation Portfolio: hands-on experience executing penetration tests and building security frameworks for AI/ML systems

- 1207 Delaware Ave #2684, Wilmington, DE 19806

- +1 929 828 7556
